Get into Game Dev:

cheat-codes for gamedev interviews

|

Welcome to Get into Game Dev: cheat-codes for game interviews!

My name is Matthew Ventures and I'm a professional game developer with a passion for teaching/helping others! This book is designed for novice developers to help them pass their interviews and for experienced developers who wish to brush up on their skills by reviewing topics or going through the practice questions. This guide is fool-proof! I would know, because it worked for me and I am often-times foolish. Below are some of the teams I have had the privilege of working with. In the years this guide has been available, dozens of developers have reached out to me over email and LinkedIn to share how it helped. Checkout some of their testimonials below! I'm confident that if you're willing to put in the time studying, this guide can help you as well. Let's get started! |

Introduction

Before You Start

If you're struggling to land an interview or you're a student pursuing internships, I wrote a book dedicated specifically to breaking into the gamedev industry which you can read for free here. Additionally, if you're completely new to game development, I wrote a course while teaching at Stanford that you can view here. I'd recommend going through those assignments before jumping into the the more advanced topics we cover in this book.

I'm a gameplay engineer which means I do work on both code and design, this book includes both topics. This book was inspired by Cracking the Coding Interview, that's a great book to read first if you are not familiar with the format of general coding interviews. However, "gamedev" interviews cover somewhat different topics including math, memory, and optimization which is where this book provides a unique value for you. Please make sure to complete each chapter's practice questions to verify your understanding, and ask questions in the comments if you need help. Good luck!

I'm a gameplay engineer which means I do work on both code and design, this book includes both topics. This book was inspired by Cracking the Coding Interview, that's a great book to read first if you are not familiar with the format of general coding interviews. However, "gamedev" interviews cover somewhat different topics including math, memory, and optimization which is where this book provides a unique value for you. Please make sure to complete each chapter's practice questions to verify your understanding, and ask questions in the comments if you need help. Good luck!

Testimonials

"I just wanted to follow up and thank you for your advice and all the time you put into helping students break into the game industry. Thanks to your help and advice, I was able to land an internship as a gameplay engineer at Blizzard on the Diablo IV team."

— Zakarya Zahhal, 2023

"I recently experienced a studio closure and had to start the hunt for a new job with no warning. 'Get into Game Dev' by Matthew Ventures proved invaluable in my job hunt. Even as a senior engineer with nearly a decade in the game industry, this guide helped refresh my knowledge, especially when it came to C++ and 3D math. Both those new to game development and more experienced folk can get a lot out of it!"

— Thomas Klovert, 2023

" Your guide was invaluable! All of the questions during my final interviews were straight from your guide! It was unbelievably helpful! I would absolutely recommend it to any other aspiring game devs."

— Avik Shenoy, 2023

" I just wanted to say I super appreciate all the work you've put into the Get Into Game Dev book and your YouTube. I've been programming for a long time now, but self-taught, and there's a lot of things I can do but wouldn't use the right terminology when discussing with other coders. This seems like a great way for me to work on that :) Thanks again!"

— Ian Snyder, 2023

"11 out of 10. It taught me information not explicitly told in my programming classes and provided me with incredibly valuable insight."

— Frazier Kyle, 2024

"I wanted to let you know that your course helped me with the id Software UI Programming interview. I made it through all of the rounds including the ‘onsite’."

— Zach Cook, 2024

"Recently bought your book and it's been incredibly helpful so far. I'm still in the process of putting a portfolio/resume together, but once I (hopefully) get an interview, I know I'll be very prepared. :)"

— John Isril, 2024

— Zakarya Zahhal, 2023

"I recently experienced a studio closure and had to start the hunt for a new job with no warning. 'Get into Game Dev' by Matthew Ventures proved invaluable in my job hunt. Even as a senior engineer with nearly a decade in the game industry, this guide helped refresh my knowledge, especially when it came to C++ and 3D math. Both those new to game development and more experienced folk can get a lot out of it!"

— Thomas Klovert, 2023

" Your guide was invaluable! All of the questions during my final interviews were straight from your guide! It was unbelievably helpful! I would absolutely recommend it to any other aspiring game devs."

— Avik Shenoy, 2023

" I just wanted to say I super appreciate all the work you've put into the Get Into Game Dev book and your YouTube. I've been programming for a long time now, but self-taught, and there's a lot of things I can do but wouldn't use the right terminology when discussing with other coders. This seems like a great way for me to work on that :) Thanks again!"

— Ian Snyder, 2023

"11 out of 10. It taught me information not explicitly told in my programming classes and provided me with incredibly valuable insight."

— Frazier Kyle, 2024

"I wanted to let you know that your course helped me with the id Software UI Programming interview. I made it through all of the rounds including the ‘onsite’."

— Zach Cook, 2024

"Recently bought your book and it's been incredibly helpful so far. I'm still in the process of putting a portfolio/resume together, but once I (hopefully) get an interview, I know I'll be very prepared. :)"

— John Isril, 2024

Purchase Options

|

Some chapters will be locked, requiring a password that will be provided on purchase. Other chapters will be provided for free, to give you a fair sample of the content prior to your purchase. All proceeds from your purchase will go to charity to the Against Malaria Foundation to help fight malaria.

|

Offer |

Cost |

📘 Free Chapters of GIGD Book (available below) 📘 |

Free (access below) |

📖 Full GIGD Book (all-access) 📖 |

|

🧑🏻🤝🧑🏻 30-minute private 1-on-1 candid chat 🧑🏻🤝🧑🏻 - Ask about career advice, portfolio setup, resume design, etc. |

95 USD (schedule by email) |

👩🏻💻 50-minute private 1-on-1 practice interview 👩🏻💻 - Customized for the exact studio you are applying to - Includes questions previously asked by that studio |

|

👨🏻🎓 60-minute public candid chat for student groups 👨🏻🎓 - Discuss game dev, portfolio/resume design, internships, etc . - Students only: highschool game dev clubs, college teams, etc. - Interview will be shared on YouTube to help others as well |

Free (schedule by email) |

🧙🏼♂️ Career Coaching 🧙🏼♂️ - Full re-design and critique of resume and portfolio - Multiple 1-on-1 practice interviews - Personalized referrals and industry contact introductions |

695 USD (schedule by email) |

📞 Quick Inquiry Hotline 📞 - Quick answers for succinct game dev career questions |

Free (contact [email protected]) |

🗒️Resume Review 🗒️ |

|

🫂Donate directly to the Against Malaria Foundation🫂 |

Table of Contents

|

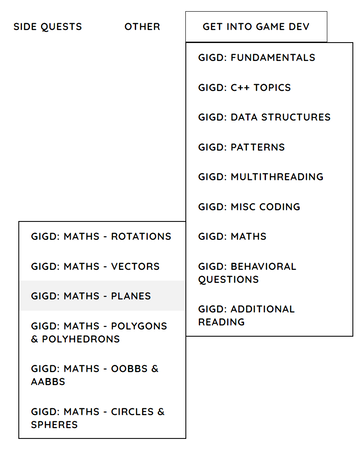

For navigation, please hover over the "Get Into Game Dev" button at the top-right of the screen, then select chapters from the drop down menu that appears (as shown in the image below). The site is rather slow so it may take about 10 seconds to load the webpage you select.

|